
OWW content scraper

Posted in Open Notebook Science, python on October 25, 2012

I have been highly inspired by Carl Boettiger’s open notebook and by his website in general. Like him, I started my open notebook on OpenWetWare (OWW). When I first started using OWW, I thought it was the most spectacular thing in the world. It was the first time I had ever used a wiki, and I must admit that I liked the format a lot.

One of the main reasons I decided not to have a privately hosted open notebook like Carl’s was that I was concerned about the information disappearing once I stopped paying for a domain. That could happen either because I simply stopped paying or because of my eventual journey into forgetfulness or death. I have posted a lot of good content online that should be retained, at the very least for `shits and giggles`. OWW is not my platform, and I naively thought that it would persist for all time. It’s the internet, right? Nothing gets thrown away on the internet…

I was told by a patent guru that things on the internet stay forever, which is why you should never post something you think you could patent. Of course, I argued against this point using the example of Geocities, which no longer exists and for which no complete archival data dump exists either. So I know for a fact that things on the internet will disappear at some point, and it is up to me to archive the things I want to keep. This idea came crashing home when OWW went dead for some time and I was left wondering whether my information and notebook entries were lost. Thankfully OWW returned; however, it left me with the impression that it would not be very stable in the future. I even tweeted OWW asking whether they still existed. I didn’t get a response.

Problems with OWW are why I moved to a wordpress.com site. WordPress has shown itself to be sustainable, at least for the short time I’ve used it, and it looks like it can and will be preserved for a “long” time. Of course, there is still the issue of archiving what I have already posted to WordPress, but I will cross that bridge when I come to it. Nonetheless, WordPress hasn’t randomly gone dark on me yet, and I like that. It doesn’t work very well as a notebook due to its blog nature, but that’s only a quibble, since getting the information out matters more to me than the form it takes. Yes, a nice leather-bound book with hand-crafted binding is preferable to a glowing computer screen, but what can you do. Plus, I absolutely love getting an email when one of my students posts something. That’s spectacular, and I love reading what they write.

Fighting with WordPress to do notebook things is just as bad as fighting with OWW to do non-notebook things. I’ve realized that no matter what service I use for my open notebook, that service will cause me pain. So I have decided that I should have my own site, one that I maintain myself and can build the features I want into. Plus, I want to set up my own version of Carl’s workflow for archiving notebook entries to figshare.

To do this, I need to scrape all the information that I posted to OWW. OWW does provide a data dump of their entire wiki, and I tried to parse out only my information from it. Unfortunately, this proved more difficult than I thought. It also meant that I would need some other way of retrieving all the images I had posted to OWW. So, rather than attempt to parse a huge wiki dump, I decided to scrape the information straight from the web pages I made on OWW.

I have used Python and Beautiful Soup to scrape the content from my OWW entries. I will post and describe each step I took to scrape my notebook content in the following paragraphs.

——————

Of course, there are the ubiquitous import statements.

    # Import statements (Python 2).
    import urllib2
    from bs4 import BeautifulSoup
    import os
    import urlparse
    import urllib

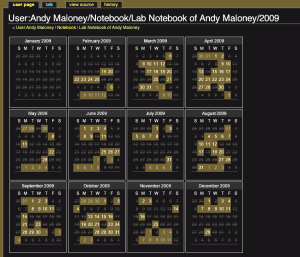

STEP 1: Get the dates for each notebook entry. The first thing to do is to get a list of all the notebook entries that I made over the years. Thankfully there is a calendar page that shows all the entries I made by year.

Looking at the source of this page shows that it contains an array of all the dates on which I made entries. On a side note, I was not very diligent about writing in my notebook. This is something that should be remedied in the future.

The function below loads the calendar page for the specified notebook year and searches the HTML for the array of dates. When it finds the array, it puts the dates into a Python list. As can be seen in the above image, the dates are written as mm/dd/yyyy. The URLs are not in this format; they are written as yyyy/mm/dd. To get the correct URL from the array, I had to reformat each date. Those dates are then used to create the notebook entry URLs.

    # Get the notebook entries for the specified year.
    def get_notebook_entries(year):
        base = 'http://openwetware.org/wiki/User:Andy_Maloney/Notebook/Lab_Notebook_of_Andy_Maloney/'

        # Read in the notebook calendar.
        try:
            request = urllib2.Request(base + str(year))
            response = urllib2.urlopen(request)
        except urllib2.URLError:
            print '\nUnable to open the requested URL.'
            return []
        page = response.read()
        response.close()

        # Split the HTML into lines for easy parsing.
        page_data = page.split('\n')
        keep = []

        # Locate the array of dates: it sits three lines below the calendar popup.
        for i, line in enumerate(page_data[:-3]):
            if "new CalendarPopup('y1');" in line \
                    and 'var fullDates = new Array' in page_data[i + 3]:
                keep.append(page_data[i + 3]
                            .replace(' var fullDates = new Array(', '')
                            .replace("'", '').replace(');', '').split(','))
        if not keep:
            return []

        # Keep only the dates notebook entries were made, reformatted from
        # mm/dd/yyyy (calendar) to yyyy/mm/dd (URL).
        keepers_ = []
        for item in keep[0]:
            u = item.split('/')
            keepers_.append(u[2] + '/' + u[0] + '/' + u[1])

        # Build (full URL, wiki path, date) tuples for each notebook entry.
        notebook_pages = []
        for element in keepers_:
            u = base + element
            v = '/wiki/User:Andy_Maloney/Notebook/Lab_Notebook_of_Andy_Maloney/' + element
            notebook_pages.append((u, v, element))
        return notebook_pages
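For comparison, here is a minimal Python 3 sketch of the same step that pulls the dates out with a regular expression instead of counting lines. It assumes the array looks like `var fullDates = new Array('mm/dd/yyyy','mm/dd/yyyy')` and that the entry URLs use zero-padded months and days; `SAMPLE`, `parse_full_dates`, and `to_url_date` are hypothetical names, and the sample HTML is made up for illustration rather than copied from OWW.

```python
import re
from datetime import datetime

# Made-up calendar snippet shaped like the page source described above.
SAMPLE = """<script>
var cal = new CalendarPopup('y1');
var fullDates = new Array('01/15/2009','03/02/2009','11/30/2009');
</script>"""

BASE = ("http://openwetware.org/wiki/User:Andy_Maloney/Notebook/"
        "Lab_Notebook_of_Andy_Maloney/")

# Capture everything between "new Array(" and the closing parenthesis.
FULL_DATES = re.compile(r"var fullDates = new Array\(([^)]*)\)")


def parse_full_dates(html):
    """Return the quoted mm/dd/yyyy strings from the fullDates array."""
    match = FULL_DATES.search(html)
    if match is None:
        return []
    return re.findall(r"'([^']+)'", match.group(1))


def to_url_date(date):
    """Convert mm/dd/yyyy (calendar format) to yyyy/mm/dd (URL format)."""
    return datetime.strptime(date.strip(), "%m/%d/%Y").strftime("%Y/%m/%d")


entry_urls = [BASE + to_url_date(d) for d in parse_full_dates(SAMPLE)]
```

Using `strptime` also means a malformed date fails loudly instead of silently producing a bad URL.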

Once I have a URL for every notebook entry I made in a year, I need another function that locates all the subpages of each notebook entry. When I was using OWW, I inadvertently made it more difficult for future me to scrape this information by putting subpages in my notebook entries for categorizing purposes. Oops.

This means that I need to locate the URLs on the page and scrape the information off the subpages. The following function accomplishes this. Again, I try to open the notebook page, and then I search for the URLs on that page. To select only the subpages that I made, a link must contain the entry’s own path, ‘/wiki/User:Andy_Maloney/Notebook/Lab_Notebook_of_Andy_Maloney/’ followed by the entry’s date; otherwise it is rejected. Links ending in the entry’s date are skipped too, since they point back to the entry itself. The astute will note that this path was baked into the notebook URL function above.

    # Find subpages in notebook entries.
    def get_notebook_subpages(pages, year):
        # One list of subpage URLs per notebook page, in the same order as pages.
        # (year is no longer needed but is kept so existing calls still work.)
        internal_notebook_links = []
        for thing in pages:
            # Open the notebook page.
            try:
                response = urllib2.urlopen(urllib2.Request(thing[0]))
            except urllib2.URLError:
                print '\nThe page is not responding:', thing[0]
                # Keep the list aligned with pages so indices still match later.
                internal_notebook_links.append([])
                continue
            soup = BeautifulSoup(response, 'html.parser')

            # Keep links under this entry's wiki path, skipping the entry itself.
            subpage_links = []
            for link in soup.findAll('a'):
                item = link.get('href')
                if item and thing[1] in item and not item.endswith(thing[2]):
                    subpage_links.append('http://openwetware.org' + item)
            internal_notebook_links.append(subpage_links)
        return internal_notebook_links
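The same link filtering can be sketched without Beautiful Soup, using only the standard library’s `html.parser`. This is a Python 3 sketch: `LinkCollector` and `subpage_links` are hypothetical names, the sample HTML is made up, and, unlike the function above, it also drops duplicate links.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, in document order."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def subpage_links(html, entry_path, date, host="http://openwetware.org"):
    """Absolute URLs of links below entry_path, excluding the entry itself."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    links = []
    for href in collector.hrefs:
        if entry_path in href and not href.endswith(date):
            url = host + href
            if url not in links:  # keep the first occurrence only
                links.append(url)
    return links


sample = ('<a href="/wiki/N/2009/10/25">this entry</a>'
          '<a href="/wiki/N/2009/10/25/PCR">PCR</a>'
          '<a href="/wiki/N/2009/10/24">previous day</a>'
          '<a href="/wiki/N/2009/10/25/PCR">PCR again</a>'
          '<a name="no-href">anchor</a>')
print(subpage_links(sample, "/wiki/N/2009/10/25", "2009/10/25"))
# ['http://openwetware.org/wiki/N/2009/10/25/PCR']
```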

Now for the real reason I made my own scraper: images. I could have used an existing scraper written by someone else, but I wouldn’t have been able to get the full-size images of the items I stored on OWW. To do that, I built another function that finds the image URLs and downloads them. I guess I should have kept all my original images and posts in a better format, something more suited for archival purposes. Oh well. Big lesson learned here.

Again, I open the page and locate all the images on it. I also filter out some images (site badges and the like) that I don’t need or want. I then reformat the image URLs so that I can download the high-resolution images. Unfortunately, this part wasn’t so easy to figure out. I believe that was due to the way the image files were structured in OWW’s database: instead of living under my username, they went into seemingly random folders like images/b/b4/my_image.jpg, and the images shown on the pages were thumbnails under an images/thumb/ folder. Nonetheless, the function below gets the images from the page for me.

    def get_images(link, path):
        # Open the page.
        try:
            response = urllib2.urlopen(urllib2.Request(link))
        except urllib2.URLError:
            # Nothing to download if the page cannot be opened.
            return []
        soup = BeautifulSoup(response, 'html.parser')

        # Remove images that are not essential to the final output (site badges).
        skip = ('somerights20.png', 'poweredby_mediawiki_88x31.png',
                '88x31_JoinOWW.png', 'Random.png')
        page_image_links = []
        for image in soup.findAll('img'):
            src = image.get('src')
            if not src:
                continue
            page_image_link = urlparse.urljoin(link, src)
            if not any(name in page_image_link for name in skip):
                page_image_links.append(page_image_link)

        # Create the high resolution image links and download each image.
        image_path = []
        for image_link in page_image_links:
            image_url_path = urlparse.urlsplit(image_link).path
            if 'thumb/' in image_link:
                # .../images/thumb/b/b4/Name.jpg/300px-Name.jpg -> .../images/b/b4/Name.jpg
                image_url_path = '/'.join(image_url_path.replace('thumb/', '').split('/')[:-1])
            high_res_link = 'http://www.openwetware.org' + image_url_path
            outpath = os.path.join(path, high_res_link.split('/')[-1])
            urllib.urlretrieve(high_res_link, outpath)
            image_path.append(outpath)
        return image_path
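The folder names are probably less random than they look. OWW runs on MediaWiki (hence the `poweredby_mediawiki` badge filtered out above), and MediaWiki’s default hashed upload layout files each upload under directories named after the first one and first two hex digits of the MD5 hash of the file name, with resized copies served from a `thumb/` directory plus a sized file name. The Python 3 sketch below assumes that default layout and an `/images` upload root; `hashed_upload_path` and `thumb_to_original` are hypothetical helpers, not part of any library.

```python
import hashlib
from urllib.parse import urlsplit, urlunsplit


def hashed_upload_path(filename, root="/images"):
    """Path of an original upload, assuming MediaWiki's default hashed layout."""
    name = filename.replace(" ", "_")  # MediaWiki stores spaces as underscores
    digest = hashlib.md5(name.encode("utf-8")).hexdigest()
    return f"{root}/{digest[0]}/{digest[:2]}/{name}"


def thumb_to_original(url):
    """Turn .../thumb/b/b4/Name.jpg/300px-Name.jpg into .../b/b4/Name.jpg."""
    parts = urlsplit(url)
    segments = parts.path.split("/")
    if "thumb" in segments:
        i = segments.index("thumb")
        # Drop the "thumb" directory and the trailing sized file name.
        segments = segments[:i] + segments[i + 1:-1]
    # Any query string or fragment is dropped.
    return urlunsplit((parts.scheme, parts.netloc, "/".join(segments), "", ""))


print(thumb_to_original(
    "http://openwetware.org/images/thumb/b/b4/Gel.jpg/300px-Gel.jpg"))
# http://openwetware.org/images/b/b4/Gel.jpg
```

Matching the whole `thumb` path segment, rather than the substring `thumb/`, keeps the conversion from touching any other part of the path.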

Here I call the functions I wrote above to collect the notebook entry and subpage URLs for each year.

    notebook_pages_2009 = get_notebook_entries(2009)
    notebook_subpages_2009 = get_notebook_subpages(notebook_pages_2009, 2009)
    notebook_pages_2010 = get_notebook_entries(2010)
    notebook_subpages_2010 = get_notebook_subpages(notebook_pages_2010, 2010)
    notebook_pages_2011 = get_notebook_entries(2011)
    notebook_subpages_2011 = get_notebook_subpages(notebook_pages_2011, 2011)
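Rather than one pair of variables per year, the results could live in a dictionary keyed by year. This sketch is written so it runs under Python 2 or 3, and it takes the two functions as arguments so it can be tried without touching the network; `collect_years`, `fake_entries`, and `fake_subpages` are hypothetical names.

```python
def collect_years(years, get_entries, get_subpages):
    """Map each year to its (entry tuples, subpage URL lists) pair."""
    notebook = {}
    for year in years:
        pages = get_entries(year)
        notebook[year] = (pages, get_subpages(pages, year))
    return notebook


# Stand-ins for get_notebook_entries and get_notebook_subpages.
def fake_entries(year):
    return [("url", "path", "%d/01/01" % year)]


def fake_subpages(pages, year):
    return [[] for _ in pages]


notebook = collect_years(range(2009, 2012), fake_entries, fake_subpages)
print(sorted(notebook))  # [2009, 2010, 2011]
```

With the real functions, `collect_years(range(2009, 2012), get_notebook_entries, get_notebook_subpages)` would stand in for the six assignments, and adding a year becomes a one-number change.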

My next step is to save the HTML to files. I only keep the content between the `<!-- start content -->` and `<!-- end content -->` comments, which is one reason the images I skipped over aren’t needed in the final scrape. I do this for both the notebook page and its associated subpages. The code below handles the 2009 entries.
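For reference, the slicing step can be written as a small string function. This sketch (`content_between_markers` is a hypothetical name) searches the whole page once instead of line by line, excludes the start comment itself, and keeps the original line breaks; it runs under Python 2 or 3.

```python
START = "<!-- start content -->"
END = "<!-- end content -->"


def content_between_markers(html, start=START, end=END):
    """Return the text between the start and end comments ('' if no start)."""
    i = html.find(start)
    if i == -1:
        return ""
    i += len(start)
    j = html.find(end, i)
    # With no end comment, keep everything after the start comment.
    return html[i:] if j == -1 else html[i:j]


page = "<body><!-- start content --><p>PCR\nworked</p><!-- end content --></body>"
print(repr(content_between_markers(page)))  # '<p>PCR\nworked</p>'
```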

    path = '/save/file/path'
    no_response = []

    def save_page(url, out_dir, filename):
        # Fetch url, keep only the wiki content, download its images, and save it.
        try:
            response = urllib2.urlopen(urllib2.Request(url))
        except urllib2.URLError:
            no_response.append(url)
            return
        page_data = response.read().split('\n')
        response.close()

        # Keep the lines from the start content comment up to the end content comment.
        out_html = []
        for j, line in enumerate(page_data):
            if '<!-- start content -->' in line:
                for sub_line in page_data[j:]:
                    if '<!-- end content -->' in sub_line:
                        break
                    out_html.append(sub_line)

        # Soup to eat. Rejoin with newlines so words split across lines stay apart.
        soup = BeautifulSoup('\n'.join(out_html), 'html.parser')
        image_path = get_images(url, out_dir)

        # Point the image tags at the downloaded copies.
        for j, tag in enumerate(soup.findAll('img')):
            if j < len(image_path) and image_path[j].split('/')[-1] in tag.get('src', ''):
                tag['src'] = image_path[j]

        # Soup to save.
        with open(os.path.join(out_dir, filename + '.html'), 'w') as f:
            f.write(soup.prettify('utf-16'))

    # Get the main page content for each 2009 notebook entry.
    for item in notebook_pages_2009:
        new_path = os.path.join(path, '2009', item[2].replace('/', '_'))
        if not os.path.isdir(new_path):
            os.makedirs(new_path)
        save_page(item[0], new_path, item[0].split('/')[-1])

    # Get the subpages of each entry (same order as notebook_pages_2009).
    for i, item in enumerate(notebook_subpages_2009):
        new_path = os.path.join(path, '2009', notebook_pages_2009[i][2].replace('/', '_'))
        for thing in item:
            new_folder = thing.split('/')[-1]
            new_folder_path = os.path.join(new_path, new_folder)
            if not os.path.isdir(new_folder_path):
                os.makedirs(new_folder_path)
            save_page(thing, new_folder_path, new_folder)

This works fairly well, although I hit some timeouts that forced me to rerun parts of the scrape. Also, I completely forgot about YouTube and Google Docs content; I’ll have to figure that one out. The good news is that I now have nicely formatted HTML notebook entries from this scrape, stored in date-stamped folders. I also think I should grab the talk pages on the wiki, although I’m pretty sure I don’t have very many of them.
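One way to soften those timeouts is to retry a download a few times before giving up. This is a Python 3 sketch with a hypothetical `with_retries` helper; it relies on `urllib.error.URLError` and socket timeouts both being subclasses of `OSError` in Python 3, and the attempt count and delays are arbitrary.

```python
import time


def with_retries(fetch, url, attempts=3, delay=2.0, retry_on=(OSError,)):
    """Call fetch(url); on a retry_on error, wait and try again."""
    for attempt in range(1, attempts + 1):
        try:
            return fetch(url)
        except retry_on:
            if attempt == attempts:
                raise  # out of attempts: let the caller see the error
            time.sleep(delay * attempt)  # back off a little more each time
```

For example, `with_retries(lambda u: urllib.request.urlopen(u, timeout=30).read(), url)` retries a page fetch; the `timeout` argument makes a stalled connection raise instead of hanging forever.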

This was a great learning experience for me. I’m sure there are easier ways to do it and better ways to catch exceptions, but this is the way I did it, drawing on what I knew.

Lesson in python and keeping my mouth shut

I was asked to write a factorial function on the spot, in a pressurized situation, as a challenge, and guess what: I couldn’t do it. This is a simple thing to do, and unfortunately it took me a bit of time away from the pressure to accomplish it. I’d never been asked to replicate a function that someone had already written a library for. As such, I was caught way off guard and got crazy defensive, saying things like “I would never do something like that, it already exists”. Doubly wrong thing to say. This is undoubtedly a side effect of my being a novice. I make no claims to being a proficient programmer…yet. You better believe that is going to change. Unfortunately, I failed miserably, and in such a spectacular manner that it was comical.

At the time, I didn’t know about some simple tools that probably everyone on the planet who knows how to program (or took classes to learn how) already knows about. I knew about the += operator for accumulating a running total in a Python loop, but I didn’t know there was an analogous *= operator. It turns out there is, and I made a complete ass of myself for not knowing about it. Lesson learned: I need to start doing the programming homework problems I can find online. Nonetheless, here is my first attempt at the function. I’m writing this mainly because it is cathartic and I need to own my mistake.

    def fact(n):
        result = 1
        for item in n:
            result *= item
        return result

Of course, there are other things to add to the definition above, such as checks to ensure that the list n is what you expect it to be. If it is a single number, that needs to be handled.

    def fact(n):
        result = 1
        if type(n) != list:
            result = n
        elif type(n) == list:
            for item in n:
                result *= item
        return result

If the input is 0, then that has to be handled as well.

    def fact(n):
        result = 1
        if n == 0:  # also true for 0.0, since 0 == 0.0
            result = 1
        elif type(n) != list:
            result = n
        elif type(n) == list:
            for item in n:
                result *= item
        return result

And finally, if a zero occurs in the list, that has to be handled too. I did it by filtering zeros out.

    def fact(n):
        result = 1
        if n == 0:  # also true for 0.0
            result = 1
        elif type(n) != list:
            result = n
        elif type(n) == list:
            # Filter out zeros (note: item > 0 also drops negative numbers).
            temp = [item for item in n if item > 0]
            for item in temp:
                result *= item
        return result

While this works, it is too little, too late for my pressurized situation. I just need to keep practicing. I’m sure there are more elegant and easier ways to do what I did above, but it is the first thing I got to work properly.

hahaha

I’m still an idiot, as the above code is completely wrong. Not because of what it does, but because it is not a factorial: it multiplies together the items of a list instead of computing n! = 1 × 2 × ... × n. Oh well, still learning lessons. I’m happy to wear this mistake like a badge of honor. At any rate, this is correct.

    def fact(n):
        if type(n) != int or n < 0:
            print 'Error, fact takes a non-negative integer.'
            return None
        result = 1
        # range(1, 1) is empty, so fact(0) returns 1 as it should.
        for item in range(1, n + 1):
            result *= item
        return result
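For completeness, here is a Python 3 sketch that raises an exception instead of printing, which is the more usual way to reject bad input, checked against the standard library’s `math.factorial`. This `fact` is my own sketch, not a reference implementation.

```python
import math


def fact(n):
    """Return n! for a non-negative integer n, using *= in a loop."""
    # bool is a subclass of int, so reject it explicitly.
    if not isinstance(n, int) or isinstance(n, bool) or n < 0:
        raise ValueError("fact takes a non-negative integer")
    result = 1
    for k in range(2, n + 1):  # empty for n = 0 and n = 1, so result stays 1
        result *= k
    return result


print(fact(5))  # 120
print(all(fact(k) == math.factorial(k) for k in range(20)))  # True
```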